LMQL AI Programming Language

What is lmql.ai?

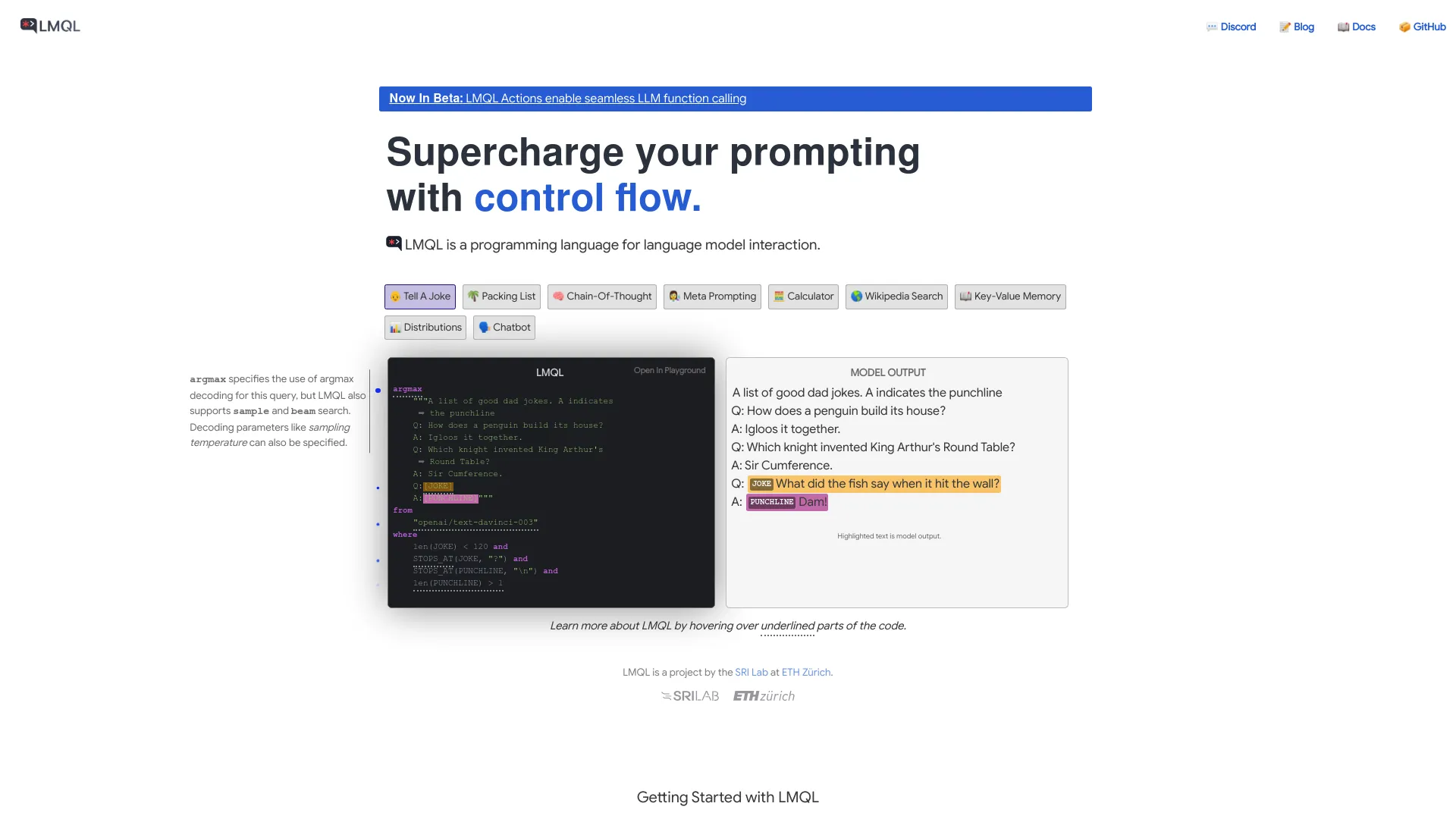

LMQL is a programming language designed for interacting with large language models (LLMs). It offers a flexible prompting system built from types, templates, constraints, and an optimizing runtime, which makes it easier to write complex prompts and to manage the interaction with the model. Developed by the SRI Lab at ETH Zurich, LMQL brings procedural programming to prompting, so prompts can be written as structured, reusable code.

The language works with several backends, such as Hugging Face's Transformers and the OpenAI API, so that LLM code stays portable across platforms. Emphasizing safety and transparency, LMQL incorporates features intended to prevent the misuse of generative AI, giving users control over what information a model provides and how it is delivered.

For anyone keen to explore LMQL, the project's website hosts a browser-based Playground IDE for writing and testing LMQL code, which makes experimentation easy.

How can I get started with lmql.ai?

To begin with LMQL, follow these simple steps:

Installation: You have two options. You can install LMQL locally on your machine (for example with `pip install lmql`) or use the web-based Playground IDE on the project's website, which requires no installation. A local installation is required if you want to use self-hosted models via Transformers or llama.cpp.

Write Your First Query: Start with a basic "Hello World" LMQL query. For instance:

```

"Say 'this is a test':[RESPONSE]" where len(TOKENS(RESPONSE)) < 25

```

This program consists of a prompt statement and a `where` clause that constrains the response to fewer than 25 tokens.

Advancing Further: As you gain proficiency, you can add complexity to your queries, try different decoding algorithms, and use control flow and branching for more intricate prompt construction.
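As a rough illustration of what a constraint like `len(TOKENS(RESPONSE)) < 25` does, the plain-Python sketch below caps a decoding loop so that the response never reaches the limit. It is not LMQL's implementation: the `next_token` callable is a hypothetical stand-in for a model, and tokens are plain strings rather than real tokenizer output.

```python
from typing import Callable, List

EOS = "<eos>"  # hypothetical end-of-sequence marker


def generate_with_token_limit(
    next_token: Callable[[List[str]], str], max_tokens: int
) -> List[str]:
    """Decode until EOS, stopping early so that len(tokens) < max_tokens holds."""
    tokens: List[str] = []
    # Appending one more token must keep the count strictly below max_tokens.
    while len(tokens) + 1 < max_tokens:
        tok = next_token(tokens)
        if tok == EOS:
            break
        tokens.append(tok)
    return tokens


# A scripted "model" that finishes on its own, and one that never stops.
script = ["this", "is", "a", "test", EOS]
print(generate_with_token_limit(lambda prefix: script[len(prefix)], 25))  # ['this', 'is', 'a', 'test']
print(len(generate_with_token_limit(lambda prefix: "test", 25)))  # 24
```

The check runs before every step, so the limit is enforced during generation instead of by truncating a finished answer.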

For comprehensive guidance and examples, consult the Getting Started section of the LMQL documentation, which explains how to write and run your first program and introduces LMQL's other features.

What are some common lmql.ai patterns?

Common patterns in LMQL encompass several key elements:

Prompt Statements: Prompts are written as top-level Python strings containing template variables such as [RESPONSE], which the model fills in during generation.

Constraints: Utilizing the where clause, you can define constraints and data types for the generated text, guiding the model's reasoning process and constraining intermediate outputs.

Control Flow: LMQL supports ordinary algorithmic logic such as if statements and for loops, so prompts can depend on program variables and on earlier model outputs, alongside standard natural-language prompting.

Decoding Algorithms: LMQL supports several decoding algorithms for executing a program, including argmax, sampling, beam search, and best_k.

Multi-variable Templates: A single prompt can define multiple input and output variables, which lets the decoder optimize the overall likelihood across several generation calls instead of treating each call separately.

Conditional Distributions: A distribution clause lets the model score a fixed set of candidate values for a variable and report the probability of each, which is useful for classification tasks.

Generations API: A straightforward Python API facilitates inference without the need to write LMQL code manually.
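Argmax decoding and the idea of a distribution over candidates can both be pictured over a fixed set of answers. The sketch below is plain Python, not LMQL's API: the log-probabilities are made-up numbers standing in for what a model might assign to each label, `argmax_candidate` picks the highest-scoring one, and renormalizing over only the candidates (a softmax of their log-probabilities) gives a distribution of the kind a distribution clause reports.

```python
import math
from typing import Dict


def argmax_candidate(logprobs: Dict[str, float]) -> str:
    """Greedy (argmax) choice: the candidate with the highest log-probability."""
    return max(logprobs, key=logprobs.get)


def candidate_distribution(logprobs: Dict[str, float]) -> Dict[str, float]:
    """Renormalize candidate log-probabilities into probabilities that sum to 1."""
    top = max(logprobs.values())  # subtract the max for numerical stability
    weights = {c: math.exp(lp - top) for c, lp in logprobs.items()}
    total = sum(weights.values())
    return {c: w / total for c, w in weights.items()}


# Hypothetical log-probabilities for each sentiment label after some prompt.
scores = {"positive": -0.5, "neutral": -1.5, "negative": -3.0}
print(argmax_candidate(scores))  # positive
for label, p in candidate_distribution(scores).items():
    print(f"{label}: {p:.3f}")
```

Renormalizing only over the listed candidates means the probabilities describe the model's preference among those options, not its probability of producing any text at all.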

Here's an illustrative example of an LMQL pattern integrating some of these elements:

```
"Greet LMQL:[GREETINGS]\n" where stops_at(GREETINGS, ".") and not "\n" in GREETINGS

if "Hi there" in GREETINGS:
    "Can you reformulate your greeting in the speech of Victorian-era English: [VIC_GREETINGS]\n" where stops_at(VIC_GREETINGS, ".")

"Analyse what part of this response makes it typically Victorian:\n"

for i in range(4):
    "-[THOUGHT]\n" where stops_at(THOUGHT, ".")

"To summarize:[SUMMARY]"
```

This pattern exemplifies the utilization of prompt statements, constraints, control flow, and multi-variable templates.

For more patterns and examples, see the LMQL documentation and the Example Showcase.
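To show how the Victorian-greeting example's control flow and `stops_at` constraints interact, here is a plain-Python mirror of the same program. It is only an illustration: `victorian_stub` is a hypothetical stand-in for a model that returns canned text, `stops_at` here simply truncates after the first stop phrase (keeping it), and the newline constraint on GREETINGS is not modeled. In LMQL the model itself would stop generating at the stop phrase.

```python
from typing import Callable, Dict, List, Tuple, Union

Model = Callable[[str], str]
Value = Union[str, List[str]]


def stops_at(text: str, stop: str) -> str:
    """Truncate text right after the first occurrence of stop (kept in the result)."""
    idx = text.find(stop)
    return text if idx == -1 else text[: idx + len(stop)]


def run_victorian_program(llm: Model) -> Tuple[Dict[str, Value], str]:
    """Mirror the example query: every generated value is appended to the prompt."""
    values: Dict[str, Value] = {}
    prompt = "Greet LMQL:"
    greeting = stops_at(llm(prompt), ".")
    values["GREETINGS"] = greeting
    prompt += greeting + "\n"

    # Branch on an earlier model output, as the query's `if` statement does.
    if "Hi there" in greeting:
        prompt += "Can you reformulate your greeting in the speech of Victorian-era English: "
        vic = stops_at(llm(prompt), ".")
        values["VIC_GREETINGS"] = vic
        prompt += vic + "\n"

    prompt += "Analyse what part of this response makes it typically Victorian:\n"
    thoughts: List[str] = []  # the query reuses THOUGHT; this sketch keeps all four
    for _ in range(4):
        prompt += "-"
        thought = stops_at(llm(prompt), ".")
        thoughts.append(thought)
        prompt += thought + "\n"
    values["THOUGHT"] = thoughts

    prompt += "To summarize:"
    summary = llm(prompt)
    values["SUMMARY"] = summary
    prompt += summary
    return values, prompt


def victorian_stub(prompt: str) -> str:
    """Hypothetical model stub: canned continuations keyed on how the prompt ends."""
    if prompt.endswith("Greet LMQL:"):
        return " Hi there, LMQL. Nice to meet you."
    if prompt.endswith("Victorian-era English: "):
        return "Good day to thee, LMQL. I trust thou art well."
    if prompt.endswith("-"):
        return " It uses archaic pronouns. It also"
    return " A formal, archaic register."


values, transcript = run_victorian_program(victorian_stub)
print(transcript)
```

If the greeting does not contain "Hi there", the branch is skipped and VIC_GREETINGS is never generated, just as in the LMQL query.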

How much does lmql.ai cost?

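LMQL itself is open-source software released under the Apache 2.0 license, so the language and its Python package are free to use. Reported pricing options include:

Free Tier: A free option appears to be available, potentially offering limited features or usage.

Subscription: A subscription model priced at $5 per month is also mentioned, although using the open-source package does not require one.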

For the most precise and current pricing details, visit the official LMQL website. In practice, the main cost of running LMQL usually comes from the model backend, such as OpenAI API usage fees or the hardware needed for self-hosted models.

What are the benefits of lmql.ai?

LMQL (Language Model Query Language) is designed to support safer, more controlled interactions with powerful language models such as GPT-4. Here are some notable advantages of LMQL compared with plain natural-language prompts:

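Targeted, Unambiguous Queries: LMQL combines a prompt with declarative constraints, so a query can state precisely what form the answer must take. A natural-language question leaves the answer format open, while an LMQL query can restrict it. For example:

- Natural language: “What is the capital of France?”

- LMQL: `"Q: What is the capital of France?\nA: [CAPITAL]" where CAPITAL in ["Paris", "Lyon", "Marseille"]`

Conversational Consistency and Memory: An LMQL program keeps the interaction in one prompt and stores each generated value in a variable, so later prompts can refer back to earlier answers. For instance:

- Natural language: “What did you previously say the capital of France was?”

- LMQL: `"Earlier you said the capital of France is {CAPITAL}. Is that correct? [CONFIRM]" where CONFIRM in ["yes", "no"]`

Integrated Fact-Checking: Because LMQL programs can run ordinary Python code, a query can include reference text from sources you choose and ask the model to judge a claim against it. For example (here `WHO_TEXT` is a hypothetical variable holding text retrieved from the WHO, not an LMQL feature):

- Natural language: “Are vaccines safe?”

- LMQL: `"Source: {WHO_TEXT}\nDoes the source support the claim 'Vaccines are safe'? [VERDICT]" where VERDICT in ["supported", "not supported"]`

Transparent Citations and Qualifications: LMQL lets users govern how they query and receive information from a model. For transparency, a template can require the model to fill a dedicated variable with a citation or qualification alongside each answer.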

Mitigating AI Risks: As AI capabilities evolve, LMQL takes a human-centered approach: constraints and explicit control flow make a model's output more predictable and easier to inspect than free-form prompting, which can help guard against misuse of generative AI.

Remember, unchecked AI poses societal risks, but LMQL offers a pathway toward safer and more verifiable interactions with language models.
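Restricting an answer to a fixed set of values, as a constraint such as `where CAPITAL in ["Paris", "Lyon", "Marseille"]` does, can be enforced token by token: at each step, only tokens that keep the partial output a prefix of some allowed value are eligible. The sketch below shows this masking idea in plain Python with a toy vocabulary and a hypothetical scoring function that would otherwise prefer "London". It illustrates the general technique and is not LMQL's actual implementation.

```python
from typing import Callable, Dict, List, Sequence


def allowed_next_tokens(partial: str, vocab: Sequence[str], choices: Sequence[str]) -> List[str]:
    """Tokens that keep `partial` a prefix of at least one allowed choice."""
    return [tok for tok in vocab if any(c.startswith(partial + tok) for c in choices)]


def constrained_greedy(
    score: Callable[[str, str], float], vocab: Sequence[str], choices: Sequence[str]
) -> str:
    """Greedy decoding in which a mask removes tokens that would break the constraint."""
    partial = ""
    # Stop at the first complete choice (assumes no choice is a prefix of another).
    while partial not in choices:
        allowed = allowed_next_tokens(partial, vocab, choices)
        if not allowed:
            raise ValueError("no token can extend the output toward an allowed value")
        partial += max(allowed, key=lambda tok: score(partial, tok))
    return partial


vocab = ["Par", "is", "Ly", "on", "Lon", "don"]
choices = ["Paris", "Lyon", "Marseille"]
# Hypothetical model preferences: left unconstrained, "Lon" (London) would win.
prefs: Dict[str, float] = {"Lon": 5.0, "Par": 3.0, "Ly": 1.0, "is": 1.0, "on": 1.0}


def score(partial: str, tok: str) -> float:
    return prefs.get(tok, 0.0)


print(max(vocab, key=lambda tok: score("", tok)))  # Lon
print(constrained_greedy(score, vocab, choices))  # Paris
```

Because invalid tokens are masked before each choice, the model never produces text outside the allowed set, so no post-hoc validation or retry is needed.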

What is LMQL and how does it benefit LLM prompting?

LMQL is a programming language specifically designed for interacting with Large Language Models (LLMs). It provides a robust and modular prompting system utilizing types, templates, constraints, and an optimizing runtime. This design allows users to construct intricate prompts for LLMs and simplifies the interaction process. LMQL was developed by the SRI Lab at ETH Zurich to enable procedural programming with prompts, facilitating the creation of structured and reusable code for LLMs.

LMQL offers significant benefits for LLM prompting, including:

- Targeted Queries: LMQL allows for the creation of focused, logically precise queries, reducing ambiguity compared to natural language.

- Conversational Memory: LMQL supports referencing previous interactions to maintain conversational consistency.

- Fact-Checking: Because queries can run ordinary Python code, claims can be checked against reference material drawn from sources you choose.

- Transparency: LMQL gives users control over the information a model provides and the form it takes, for example by requiring a citation or qualification alongside each answer.

How does LMQL support nested queries and what are their benefits?

LMQL now supports nested queries, bringing procedural programming to prompting. Nested queries allow users to modularize local instructions and reuse prompt components, making it easier to manage complex interactions with LLMs.

The benefits of using nested queries in LMQL include:

- Modularity: By modularizing prompts, developers can create reusable components, simplifying code maintenance and updates.

- Enhanced Organization: Nested queries enable a structured approach to prompt construction, making it easier to organize and manage larger projects.

- Flexibility: Developers can build upon existing query components, allowing for flexible adaptation to different use cases without starting from scratch.

- Improved Efficiency: Reusing prompt components reduces redundancy and makes prompt code faster to write and maintain.
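The modularity idea behind nested queries can be sketched in plain Python: a sub-query keeps its own local instructions and hands back only its result, so the outer prompt stays short and the component can be swapped or reused. This is not LMQL's nested-query syntax; `chain_of_thought`, `direct_answer`, and `arithmetic_stub` are hypothetical names used only for illustration.

```python
from typing import Callable

Model = Callable[[str], str]
SubQuery = Callable[[Model, str], str]


def chain_of_thought(llm: Model, question: str) -> str:
    """Reusable component: reason step by step, then extract a short answer."""
    reasoning = llm(f"Q: {question}\nA: Let's think step by step.\n")
    answer = llm(f"Q: {question}\n{reasoning}\nTherefore, the answer is")
    return answer.strip()


def direct_answer(llm: Model, question: str) -> str:
    """Alternative component with the same signature: ask for the answer directly."""
    return llm(f"Q: {question}\nA:").strip()


def answer_question(llm: Model, question: str, sub_query: SubQuery) -> str:
    # Only the sub-query's return value enters the outer prompt; its local
    # instructions (such as "Let's think step by step") stay inside it.
    return f"Q: {question}\nFinal answer: {sub_query(llm, question)}"


def arithmetic_stub(prompt: str) -> str:
    """Hypothetical model stub returning canned text."""
    if prompt.endswith("Let's think step by step.\n"):
        return "Six times seven is 42."
    return " 42"


print(answer_question(arithmetic_stub, "What is 6 * 7?", chain_of_thought))
print(answer_question(arithmetic_stub, "What is 6 * 7?", direct_answer))
```

Swapping `chain_of_thought` for `direct_answer` changes how the answer is produced without touching the outer prompt, which is the reuse benefit the list describes.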

Which backends are compatible with LMQL and how does it ensure portability across them?

LMQL is designed to be compatible with several LLM backends, ensuring portability of LLM code across these platforms. The backends supported by LMQL are:

- llama.cpp

- OpenAI

- Hugging Face's Transformers

LMQL ensures portability across these backends by allowing users to switch between them with just a single line of code. This flexibility means that developers can choose the most suitable backend for their specific application needs or constraints, without having to rewrite the LMQL code. This approach streamlines the development process and enhances the adaptability of LLM applications.
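The design behind single-line backend switching can be sketched generically: query code depends only on a small interface, and the concrete backend is chosen in one place. LMQL has its own model-selection mechanism (see its documentation for the exact syntax); the `Backend` protocol and `StubBackend` class below are hypothetical stand-ins that make no network calls and do not mirror LMQL's API.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


class Backend(Protocol):
    def complete(self, prompt: str) -> str: ...


@dataclass
class StubBackend:
    """Hypothetical backend stub; a real one would call a model or an API."""
    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] reply to: {prompt}"


BACKENDS: Dict[str, Backend] = {
    "openai": StubBackend("openai-stub"),
    "transformers": StubBackend("transformers-stub"),
    "llama.cpp": StubBackend("llama.cpp-stub"),
}


def run_query(prompt: str, backend: Backend) -> str:
    # The query logic never names a concrete backend class.
    return backend.complete(prompt)


SELECTED = "llama.cpp"  # the one line to change when switching backends
print(run_query("Say 'this is a test'", BACKENDS[SELECTED]))
# [llama.cpp-stub] reply to: Say 'this is a test'
```

Keeping the selection in a single variable is what makes the switch a one-line change; everything downstream only sees the shared interface.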