AI Music Generation Tool

What is the main use case for Musci.io?

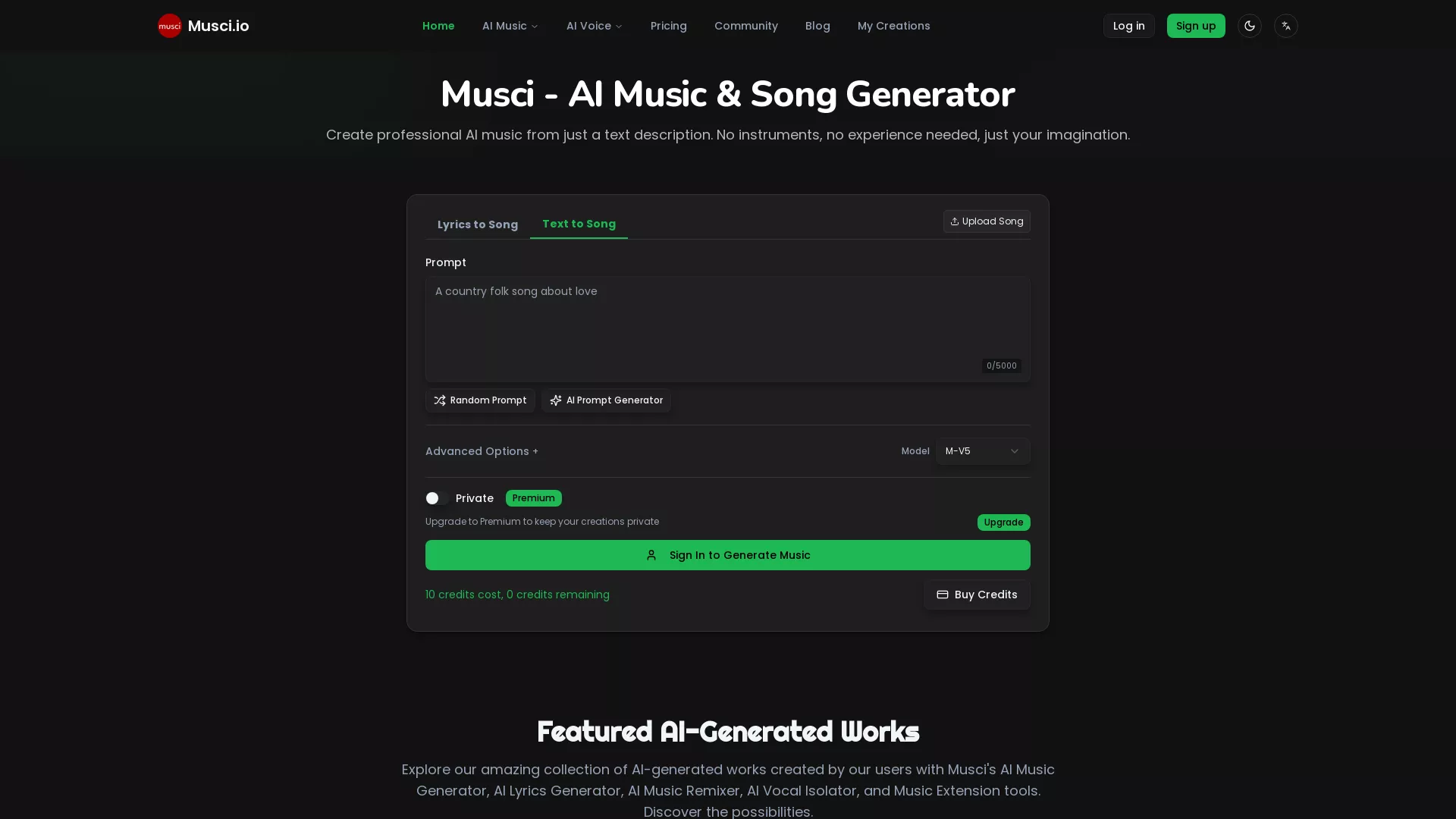

Musci.io solves the content creator's dilemma: needing original music without hiring composers or risking copyright strikes. YouTubers use it for intro sequences, podcast hosts generate episode themes, and game developers score indie projects. The tool bridges the gap between stock music libraries (limited, overused) and custom composition (expensive, time-consuming). Users type descriptions like "upbeat electronic for tech review" and get production-ready tracks with full commercial rights.

What impact has Musci.io had on its users or the industry?

Musci.io democratizes music production for creators who previously couldn't afford custom soundtracks. Small businesses now score promotional videos in-house instead of outsourcing. Independent filmmakers prototype scenes with temp music that actually fits their vision. The platform has processed thousands of generations, helping users avoid copyright claims while maintaining creative control. It's shifting music creation from a gatekeeper model to an accessible utility.

What AI model does Musci.io use?

The platform leverages transformer-based audio generation models trained on diverse musical datasets. These models understand both musical structure (rhythm, harmony, progression) and semantic relationships between text descriptions and sonic characteristics. The system uses latent diffusion techniques to synthesize audio waveforms, allowing control over genre, mood, instrumentation, and duration through natural language inputs.
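
To make the idea of natural-language control concrete, here is a minimal sketch of how the four controls named above (genre, mood, instrumentation, duration) could be collected and turned into a text description. The `TrackRequest` class and its `to_prompt` method are hypothetical names invented for illustration; they are not part of any Musci.io API, and the real system may interpret prompts very differently.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TrackRequest:
    """Hypothetical container for the controls described above (not a Musci.io API)."""

    genre: str
    mood: str
    instrumentation: List[str] = field(default_factory=list)
    duration_seconds: int = 30

    def __post_init__(self) -> None:
        # A track needs a positive length; reject anything else early.
        if self.duration_seconds <= 0:
            raise ValueError("duration_seconds must be positive")

    def to_prompt(self) -> str:
        """Compose a plain-text description from the structured controls."""
        prompt = f"{self.mood} {self.genre}"
        if self.instrumentation:
            prompt += " with " + " and ".join(self.instrumentation)
        prompt += f", about {self.duration_seconds} seconds"
        return prompt


request = TrackRequest(
    genre="electronic",
    mood="upbeat",
    instrumentation=["synth", "drums"],
    duration_seconds=30,
)
print(request.to_prompt())
```

Keeping the controls in a structured form like this is only one possible design; it makes it easy to vary a single attribute, such as mood, while holding the others fixed.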
