AI Video Motion Tracking

What is omnimotion.github.io?

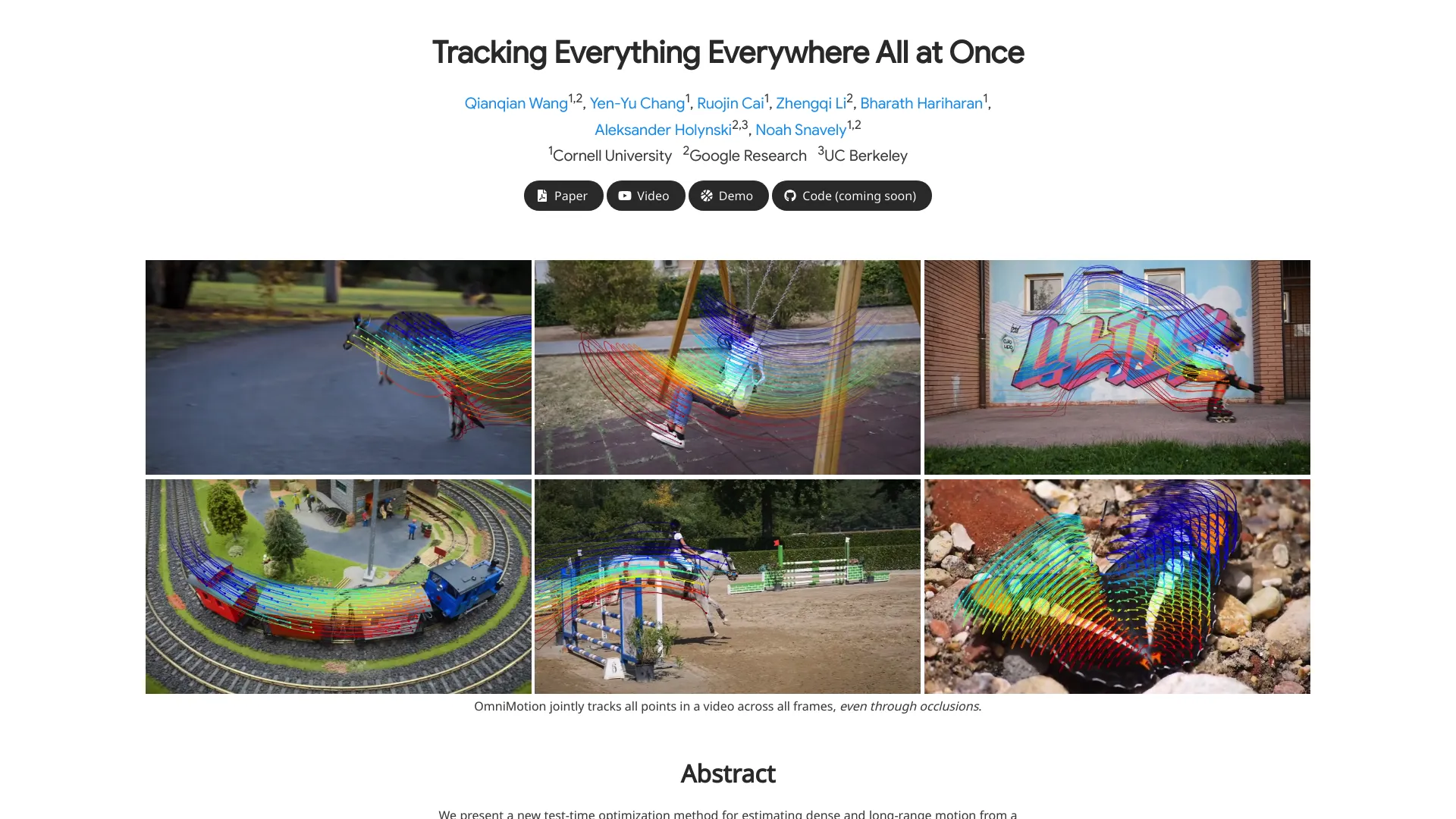

OmniMotion introduces a new approach to motion representation that addresses limitations of traditional methods such as pairwise optical flow. Optical flow relates only two frames at a time, so long-range tracks built by chaining it accumulate drift and break when points are occluded. OmniMotion instead represents a whole video with a quasi-3D canonical volume and establishes bijections between each frame's local space and this canonical space. Because every frame maps into the same volume, it can track every pixel with global consistency, including through occlusions. OmniMotion supports diverse combinations of camera and object motion. It is implemented in PyTorch, and the code is openly available on GitHub for further exploration and integration into other applications.
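To make the bijection idea concrete, here is a minimal sketch of how a correspondence is routed through canonical space: a 3D point in frame i is mapped into canonical space by T_i, then out into frame j by the inverse T_j^{-1}. In OmniMotion the per-frame bijections are learned invertible networks; this sketch swaps in a hypothetical per-axis affine map only because it is trivially invertible. `FrameBijection` and `map_between_frames` are illustrative names, not the repository's API.

```python
from dataclasses import dataclass

Point = tuple[float, float, float]


@dataclass(frozen=True)
class FrameBijection:
    """Hypothetical stand-in for a learned per-frame bijection T_i.

    Maps a 3D point from one frame's local space into canonical space with a
    per-axis scale and offset; it is invertible as long as no scale is zero.
    """

    scale: Point
    offset: Point

    def to_canonical(self, x: Point) -> Point:
        return tuple(s * xi + o for s, xi, o in zip(self.scale, x, self.offset))

    def from_canonical(self, u: Point) -> Point:
        return tuple((ui - o) / s for s, ui, o in zip(self.scale, u, self.offset))


def map_between_frames(x_i: Point, t_i: FrameBijection, t_j: FrameBijection) -> Point:
    """Correspondence in frame j: x_j = T_j^{-1}(T_i(x_i))."""
    return t_j.from_canonical(t_i.to_canonical(x_i))


t0 = FrameBijection(scale=(1.0, 1.0, 1.0), offset=(0.0, 0.0, 0.0))
t1 = FrameBijection(scale=(2.0, 1.0, 1.0), offset=(1.0, 0.0, 0.5))

x1 = map_between_frames((1.0, 2.0, 3.0), t0, t1)  # (0.0, 2.0, 2.5)
x0 = map_between_frames(x1, t1, t0)               # back to (1.0, 2.0, 3.0)
```

Mapping the result back from frame 1 to frame 0 recovers the original point, which is exactly what makes each per-frame map a bijection.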

How does omnimotion.github.io work?

OmniMotion introduces a novel approach to motion representation and tracking in videos, focusing on several key innovations:

Canonical Volume Representation:

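OmniMotion represents an entire video with a quasi-3D canonical volume: a single 3D space shared by all frames. It is "quasi" 3D because it does not need to be a physically accurate reconstruction of the scene. Because every frame is tracked against this same reference, tracks remain globally consistent across the video.

Pixel-Wise Tracking: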

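For each frame, OmniMotion learns a bijection between that frame's local 3D space and the canonical space. To track a pixel, points along the pixel's ray in the query frame are mapped into canonical space and then into the target frame through the inverse of the target frame's bijection; blending these mapped points yields the pixel's corresponding location. Because this works for every pixel, OmniMotion produces dense, pixel-wise tracks that stay accurate even when points are occluded.

Handling Occlusions: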

Because tracks are defined through the global canonical representation rather than by chaining flow between neighboring frames, a point keeps the same canonical location while it is hidden. This lets OmniMotion keep tracking objects that move behind one another and pick them up again when they reappear.

Implementation:

Implemented in PyTorch, OmniMotion's method and codebase are openly available on GitHub. The repository includes detailed instructions, enabling developers to explore technical specifics, examples, and integration possibilities.

For more detailed technical insights and practical examples, you can visit the OmniMotion GitHub repository.
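The pixel-wise tracking step can be sketched for a single query pixel. Samples along the pixel's ray are mapped into the target frame through the bijections, their densities come from the canonical volume, and standard volume-rendering (alpha-compositing) weights blend the mapped samples into one corresponding 3D point. This is a minimal sketch that assumes the mapping and density lookups have already been done; the function names are hypothetical, and the final projection to 2D pixel coordinates is omitted.

```python
import math

# Sketch of OmniMotion-style tracking for ONE query pixel.
# Assumption: each ray sample has already been mapped into the target frame
# and its density has been read from the canonical volume.


def compositing_weights(densities, deltas):
    """Volume-rendering weights w_k = T_k * alpha_k along one ray."""
    weights = []
    transmittance = 1.0  # fraction of the ray not yet absorbed
    for sigma, delta in zip(densities, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    return weights


def track_pixel(mapped_points, densities, deltas):
    """Blend the samples' target-frame 3D positions into one corresponding point.

    Weights are normalized so the result is a convex combination of the
    samples (a choice made for this sketch).
    """
    weights = compositing_weights(densities, deltas)
    total = sum(weights)
    if total == 0.0:
        raise ValueError("no density along the ray; correspondence undefined")
    return tuple(
        sum(w * p[d] for w, p in zip(weights, mapped_points)) / total
        for d in range(3)
    )


samples_in_target = [(0.0, 0.0, 1.0), (5.0, 6.0, 2.0), (9.0, 9.0, 3.0)]
point = track_pixel(samples_in_target, densities=[0.0, 50.0, 50.0], deltas=[1.0, 1.0, 1.0])
# The opaque second sample dominates, so point is approximately (5.0, 6.0, 2.0).
```

Compositing, rather than picking a single sample, keeps the result differentiable with respect to the densities, which matters when the representation is fit by gradient-based optimization.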

What are the benefits of omnimotion.github.io?

OmniMotion offers several distinct advantages:

Accurate Motion Tracking:

OmniMotion tracks points precisely across video frames. Unlike traditional pairwise optical flow methods, it maintains global consistency, so tracks remain reliable in challenging scenarios such as occlusions.

Consistent Results:

Because every frame is mapped through the same quasi-3D canonical volume, OmniMotion's correspondences agree with one another: mapping a point from frame A to frame B and then to frame C gives the same result as mapping it directly from A to C. This consistency is crucial for applications such as video stabilization and object tracking, where reliable motion representation is essential.

Handling Occlusions:

OmniMotion excels at tracking objects that may move behind each other or temporarily disappear from view. Its global representation approach allows it to handle complex scenarios effectively, maintaining accurate tracking throughout.

Open-Source Implementation:

Implemented in PyTorch, OmniMotion is freely available on GitHub. Because the code is open source, developers can explore the codebase, experiment with its functionality, and integrate OmniMotion into their own projects.

For those interested in leveraging advanced motion tracking capabilities with robust handling of occlusions, OmniMotion provides a valuable and accessible solution through its open-source implementation in PyTorch.

What are the limitations of omnimotion.github.io?

While OmniMotion offers significant advantages, it's important to consider its limitations:

Computational Cost:

OmniMotion is optimized separately for each video, and computing a correspondence requires evaluating neural networks at many sample points along each pixel's ray. This makes it computationally expensive and unsuited to real-time applications.

Memory Requirements:

Representing a whole video with a single canonical volume and per-frame mappings, and optimizing it against correspondences between many pairs of frames, requires significant memory. For long or high-resolution videos, managing memory usage becomes crucial.

Initialization Challenges:

Because the representation is fit by optimization, results depend on how the canonical volume and per-frame mappings are initialized. A poor initialization can lead the optimization to a suboptimal solution and degrade tracking quality.

Scene Complexity:

While OmniMotion handles occlusions well, highly complex scenes with rapid motion changes may still pose challenges, and occlusions involving multiple layers of objects can introduce tracking inaccuracies.

Lack of Fine Details:

The global representation used by OmniMotion prioritizes consistency over fine pixel-level details. For applications where precise pixel-level information is essential, other methods that focus on detail preservation might be more suitable.

Every technique, including OmniMotion, comes with trade-offs. It's crucial to assess these limitations against your specific use case and requirements to determine the suitability of OmniMotion for your application.
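To give the Computational Cost and Memory Requirements items a sense of scale, here is a back-of-the-envelope estimate. It assumes a 100-frame video at 480x854 pixels, 32 samples per ray, three network evaluations per sample, and dense float32 flow stored for every ordered frame pair. All of these numbers are illustrative assumptions rather than settings from the OmniMotion repository, and the function names are hypothetical.

```python
# Back-of-the-envelope estimates for OmniMotion-style per-video optimization.
# All inputs (resolution, frame count, samples per ray) are illustrative
# assumptions, not values taken from the OmniMotion repository.


def network_evaluations(height, width, samples_per_ray, target_frames, evals_per_sample=3):
    """Network evaluations needed to map every pixel of one query frame.

    evals_per_sample=3 assumes one forward bijection, one inverse bijection
    and one canonical-volume query per ray sample.
    """
    return height * width * samples_per_ray * target_frames * evals_per_sample


def all_pairs_flow_bytes(num_frames, height, width, bytes_per_value=4):
    """Storage for dense 2D flow (float32 by default) for every ordered frame pair."""
    ordered_pairs = num_frames * (num_frames - 1)
    return ordered_pairs * height * width * 2 * bytes_per_value


if __name__ == "__main__":
    evals = network_evaluations(480, 854, samples_per_ray=32, target_frames=99)
    print(f"network evaluations for one query frame: {evals:,}")
    for frames in (100, 200):
        gb = all_pairs_flow_bytes(frames, 480, 854) / 1e9
        print(f"{frames} frames: {gb:.1f} GB of pairwise flow")
```

With these assumptions the script reports about 3.9 billion network evaluations to map every pixel of one query frame to all other frames, and about 32.5 GB of pairwise flow for 100 frames versus 130.5 GB for 200 frames. Because the number of frame pairs grows quadratically, doubling the video length roughly quadruples this storage.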

How to get started with omnimotion.github.io?

To get started with OmniMotion, follow these steps:

Clone the Repository:

Visit the OmniMotion GitHub repository.

Clone the repository to your local machine using Git:

```
git clone https://github.com/qianqianwang68/omnimotion.git
```

Install Dependencies:

Ensure you have Python and PyTorch installed on your system.
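Before installing the remaining packages, a quick sanity check can confirm that the interpreter you plan to use can see PyTorch. The script below is a minimal sketch using only the standard library's `importlib.util.find_spec`; the `check_environment` name is illustrative, and the module list is an assumption you can extend with whatever the README requires.

```python
import importlib.util
import sys


def check_environment(required=("torch",)):
    """Print which required modules are importable and return the missing ones."""
    print(f"Python {sys.version.split()[0]}")
    missing = [name for name in required if importlib.util.find_spec(name) is None]
    for name in required:
        print(f"{name}: {'missing' if name in missing else 'found'}")
    return missing


if __name__ == "__main__":
    if "torch" not in check_environment():
        import torch

        # torch.cuda.is_available() reports whether a CUDA-capable GPU is usable.
        print(f"PyTorch {torch.__version__}, CUDA available: {torch.cuda.is_available()}")
```

`find_spec` only locates a module without importing it, so the check stays fast and does not fail on a missing package.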

Navigate to the cloned repository:

```
cd omnimotion
```

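Install the required Python packages listed in the repository's README. If the repository provides a requirements.txt file, you can install them with: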

```
pip install -r requirements.txt
```

Explore the Code:

Dive into the codebase to understand how OmniMotion works.

Examine implementation details such as the quasi-3D canonical volume representation and pixel-wise tracking.

Run Examples:

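The README describes how to prepare a video and run OmniMotion on it. Run the provided script to observe OmniMotion in action; the path below is a placeholder, so use the script name and arguments given in the README: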

```
python examples/demo.py
```

Experiment and Adapt:

Customize OmniMotion to suit your specific requirements.

Adjust parameters, integrate it into your projects, and explore its capabilities further.

For additional instructions or specific details, refer to the repository's README file. Enjoy exploring OmniMotion!

How does OmniMotion handle occlusions in video tracking?

OmniMotion excels at tracking through occlusions by maintaining a global representation of motion across an entire video sequence. This approach enables the tool to handle complex scenarios where objects in the frame may temporarily move behind each other or go out of view. By utilizing a quasi-3D canonical volume, OmniMotion represents the motion in a globally consistent manner, allowing for accurate tracking through occlusions that challenge traditional pairwise optical flow methods.
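One common way a quasi-3D representation can decide visibility is a depth test: render the depth of the visible surface along the target pixel's ray and mark the tracked point as occluded if it lies noticeably behind that surface. The sketch below illustrates such a test under stated assumptions (uniform sample spacing, a hypothetical `margin` tolerance, illustrative function names); it is not necessarily the exact rule OmniMotion uses.

```python
import math


def expected_depth(densities, sample_depths, step):
    """Composite sample depths along one ray into the depth of the visible surface.

    Assumes uniformly spaced samples `step` apart.
    """
    depth, transmittance = 0.0, 1.0
    for sigma, z in zip(densities, sample_depths):
        alpha = 1.0 - math.exp(-sigma * step)
        depth += transmittance * alpha * z
        transmittance *= 1.0 - alpha
    return depth


def is_occluded(tracked_depth, surface_depth, margin=0.05):
    """Occluded if the tracked point lies behind the target frame's visible surface.

    margin is a hypothetical tolerance for small depth errors.
    """
    return tracked_depth > surface_depth + margin


# Ray through the target pixel: empty space, then an opaque surface at depth 2.
surface = expected_depth([0.0, 100.0, 100.0], [1.0, 2.0, 3.0], step=1.0)
print(is_occluded(3.0, surface))   # True: tracked point is behind the surface
print(is_occluded(2.02, surface))  # False: tracked point is on the surface
```

In the interactive demo described below, a flag like this is what decides whether a correspondence is drawn as a cross or a dot.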

What technical innovations make OmniMotion unique in motion tracking?

OmniMotion introduces several technical innovations that set it apart in the field of motion tracking. Key among them is the use of a quasi-3D canonical volume for video representation, which captures motion information across frames to ensure global consistency. Additionally, pixel-wise tracking is achieved through bijections between local and canonical spaces, providing accurate results even during occlusions. This method accommodates diverse combinations of camera and object motions, and its implementation in PyTorch is openly accessible for further exploration and integration.
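The bijections also explain the "global consistency" claim. Because every correspondence passes through the same canonical space, going from frame i to j and then from j to k lands where going directly from i to k does, a property that independently estimated pairwise flow fields do not guarantee. The sketch below checks this with hypothetical 1D affine maps; `SCALE`, `OFFSET` and the function names are illustrative stand-ins for learned invertible networks.

```python
# Hypothetical per-frame bijections, 1D for readability: frame t's local
# coordinate x maps to canonical space as u = SCALE[t] * x + OFFSET[t].
SCALE = [1.0, 1.5, 0.8, 2.0]
OFFSET = [0.0, -2.0, 3.5, 1.0]


def to_canonical(t: int, x: float) -> float:
    return SCALE[t] * x + OFFSET[t]


def from_canonical(t: int, u: float) -> float:
    return (u - OFFSET[t]) / SCALE[t]


def correspond(i: int, j: int, x: float) -> float:
    """Map a point from frame i to frame j through the shared canonical space."""
    return from_canonical(j, to_canonical(i, x))


def cycle_error(i: int, j: int, k: int, x: float) -> float:
    """Gap between the chained route i -> j -> k and the direct route i -> k."""
    return abs(correspond(j, k, correspond(i, j, x)) - correspond(i, k, x))


frames = range(len(SCALE))
worst = max(
    cycle_error(i, j, k, x)
    for i in frames
    for j in frames
    for k in frames
    for x in (-3.0, 0.5, 7.25)
)
print(f"largest cycle error: {worst:.1e}")  # only floating-point rounding remains
```

Chaining independently estimated flow fields offers no such guarantee: small per-pair errors add up along the chain instead of cancelling.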

What can visitors expect to see in the OmniMotion interactive demo?

Visitors to the OmniMotion interactive demo can expect an engaging exploration of pixel correspondences across video frames. By clicking on any location in a query frame, users can observe how that point corresponds to a location in a target frame. The demo features a slider to switch between different target frames and a 'clear points' button to remove plotted points. Points identified as occluded are marked with crosses, while others are displayed as dots, offering a clear visualization of the correspondences tracked by OmniMotion.
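The demo's behavior maps onto a small data model: each clicked query point owns one correspondence per target frame, the slider selects which target frame to draw, and the occlusion flag decides between a cross and a dot. The sketch below is a hypothetical model of that logic (`Correspondence`, `DemoState` and its methods are illustrative names, not the demo's actual code); the marker codes `"x"` and `"o"` follow matplotlib's conventions for crosses and dots.

```python
from dataclasses import dataclass, field


@dataclass
class Correspondence:
    x: float
    y: float
    occluded: bool


@dataclass
class DemoState:
    """Hypothetical demo state: query point -> one correspondence per target frame."""

    tracks: dict[tuple[float, float], list[Correspondence]] = field(default_factory=dict)

    def add_query(self, qx: float, qy: float, track: list[Correspondence]) -> None:
        """Record the track computed for a point clicked in the query frame."""
        self.tracks[(qx, qy)] = track

    def clear_points(self) -> None:
        """Equivalent of the demo's 'clear points' button."""
        self.tracks.clear()

    def markers_for(self, target_frame: int) -> list[tuple[float, float, str]]:
        """Points to draw for the slider's target frame: 'x' if occluded, else 'o'."""
        markers = []
        for track in self.tracks.values():
            c = track[target_frame]
            markers.append((c.x, c.y, "x" if c.occluded else "o"))
        return markers


state = DemoState()
state.add_query(10.0, 20.0, [Correspondence(10.0, 20.0, False), Correspondence(12.0, 21.0, True)])
print(state.markers_for(1))  # [(12.0, 21.0, 'x')]
```

Keeping a full track per query point means moving the slider only changes which entry is drawn; nothing needs to be recomputed.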